Quick page benchmarks

I love optimising performance, be it in databases, scripts or webpages. With database queries and scripts, an improvement is usually evident: you can compare the total runtimes directly.

Webpages, however, are a bit more tricky when the differences are relatively small. I’ll show you a quick way to run benchmarks that won’t require you to set up a lot of stuff in your framework but instead works with curl and hyperfine, a command-line benchmarking tool.

What are we testing?

So let’s first have a quick look at the code I want to demonstrate this with:

    # The migration for our data
    create_table :posts do |t|
      t.string :title
      t.string :tag
      t.timestamps
    end

    class PostsController < ApplicationController
      def index
        @posts = Post.where(title: 'Faster is more fun', tag: 'ruby')
      end
    end

I filled up the database with 440000 records to play with.

I want to figure out whether adding an index to posts on :title and :tag will make the request faster. I am pretty sure it should.

The example is contrived, but let’s explore it anyway. The view looks like this:

<% @posts.each do |post| %>

<%= post.id %>

<% end %>

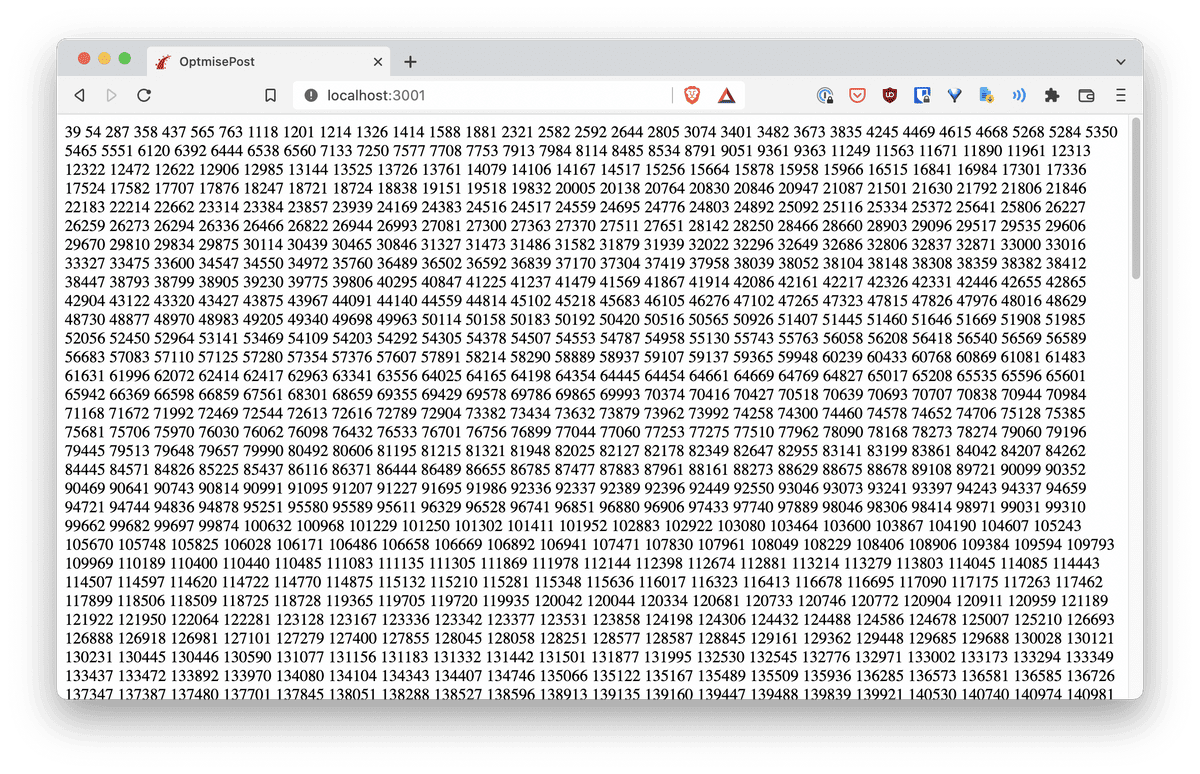

Which looks like this:

Imagine this is a webpage with some authorisation in front of it. How can we benchmark it? We might think of a Capybara or Rack::Test test, which would work. We can hit the page a few hundred times in a Benchmark block in Ruby. We’d then have to set up the right state in the test suite, sign in users, etc. Writing this sounds like a lot of work, but there is an easier way!

We will grab the request from the browser as a curl command, which will include all the cookies we need for authentication, and we don’t have to think about the URL; it will be in there already. The test data is in our development database, which we could also point to a follower or some other place with more data.

We will create a command line script to run the curl request and feed it into hyperfine to benchmark it.

We will then make our tweaks and run hyperfine again to see the ‘after’ result and validate that we made things better, not worse.

Ensure you have hyperfine installed, for example via Homebrew: `brew install hyperfine`.

So the steps are:

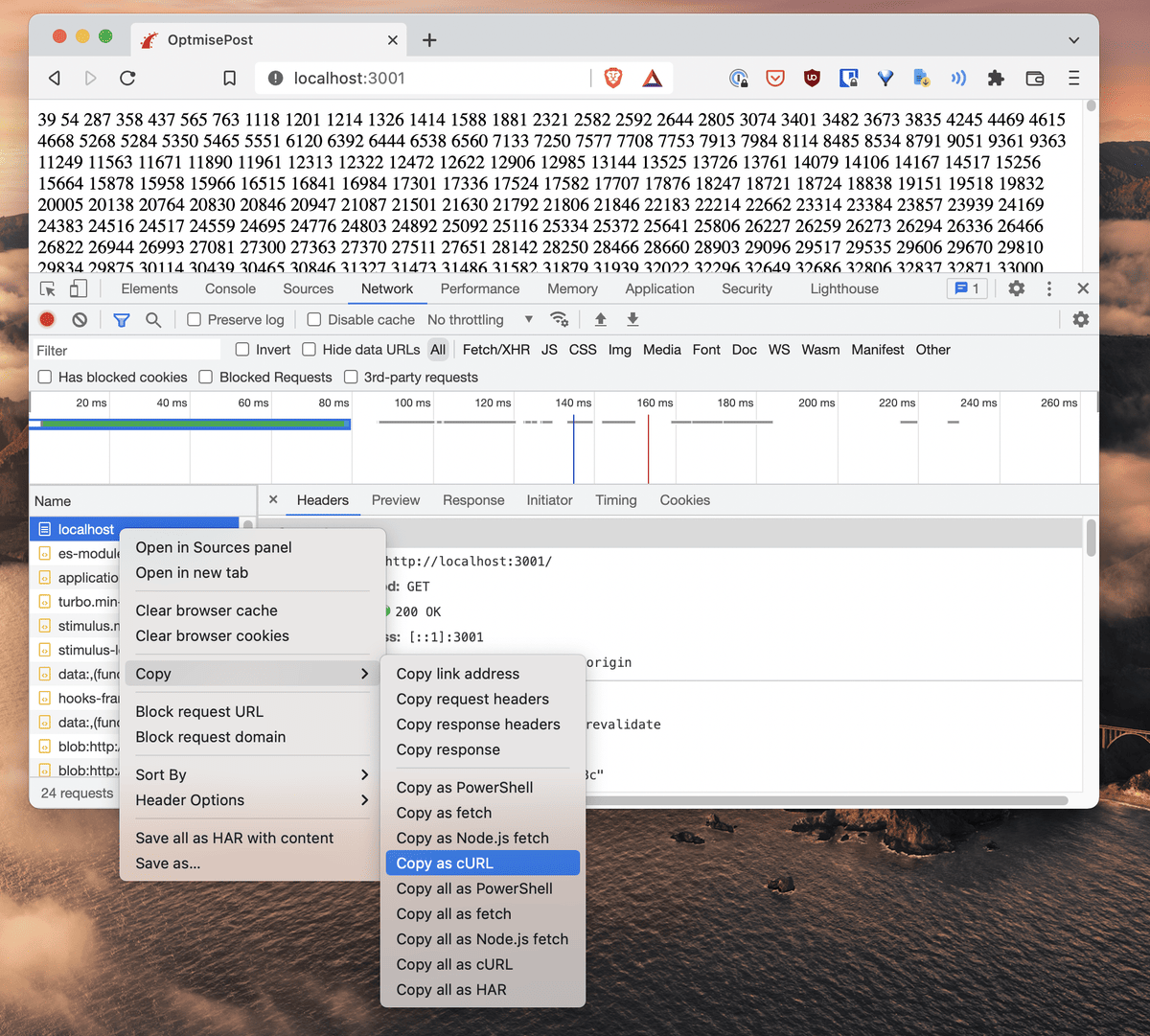

- Open up the development console

- Go to the Network tab

- Copy the request to the page as curl

- Paste it in a file called whatever you like, such as

benchmark.sh - Run

chmod +x benchmark.sh - Run hyperfine to capture the before-result:

hyperfine --warmup 10 --min-runs 100 ./benchmark.sh - Make our changes and rerun the benchmark to capture the after-result.

So:

Which looks like this:

    curl 'http://localhost:3001/' \
      -H 'Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9' \
      -H 'Accept-Language: en-GB' \
      -H 'Cache-Control: max-age=0' \
      -H 'Connection: keep-alive' \
      -H 'Cookie: _...' \
      -H 'If-None-Match: W/"2474ec9d05466c6ad84b819e7221085b"' \
      -H 'Sec-Fetch-Dest: document' \
      -H 'Sec-Fetch-Mode: navigate' \
      -H 'Sec-Fetch-Site: same-origin' \
      -H 'Sec-Fetch-User: ?1' \
      -H 'Sec-GPC: 1' \
      -H 'Upgrade-Insecure-Requests: 1' \
      -H 'User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.61 Safari/537.36' \
      --compressed

After making the script executable, we can run hyperfine:

hyperfine --warmup 10 --min-runs 100 ./benchmark.sh

The flag `--warmup 10` will first run the request 10 times without measuring. I do this to wake up Rails and the database server a bit. `--min-runs 100` will ensure we fire at least 100 requests, so that the mean is based on enough samples and is not dominated by a few slow outliers.
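To make the two flags a bit more concrete, here is a minimal Ruby sketch of the same idea: run a block a few times without timing it, then time a fixed number of runs and summarise them. The `measure` helper is hypothetical and only illustrates the principle; it is not how hyperfine is implemented, and using the sample standard deviation is my own choice.

```ruby
# Hypothetical helper: a rough analogue of `--warmup` and `--min-runs`.
# Illustration only, not hyperfine's implementation. Needs runs >= 2.
def measure(warmup:, runs:)
  warmup.times { yield } # unmeasured runs, to warm up caches and connections

  timings_ms = Array.new(runs) do
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    yield
    (Process.clock_gettime(Process::CLOCK_MONOTONIC) - started) * 1000.0
  end

  mean = timings_ms.sum / timings_ms.size
  # Sample variance (divides by n - 1).
  variance = timings_ms.sum { |t| (t - mean)**2 } / (timings_ms.size - 1)

  {
    mean_ms: mean,
    stddev_ms: Math.sqrt(variance),
    min_ms: timings_ms.min,
    max_ms: timings_ms.max,
    runs: timings_ms.size
  }
end

# Stand-in workload; in the article the workload is the curl request.
result = measure(warmup: 10, runs: 100) { 1_000.times { |i| i * i } }
puts format("%.3f ms ± %.3f ms (%d runs)", result[:mean_ms], result[:stddev_ms], result[:runs])
```

The warmup runs are thrown away on purpose: the first few requests often pay one-off costs that would otherwise inflate the mean.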

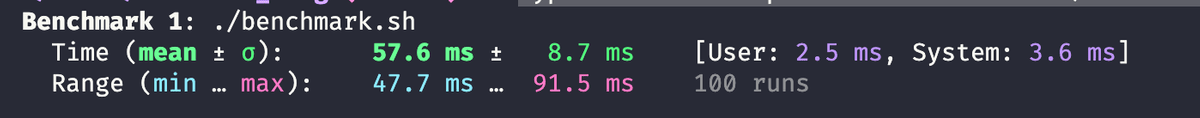

The result looks as follows:

Or:

Benchmark 1: ./benchmark.sh

Time (mean ± σ): 57.6 ms ± 8.7 ms [User: 2.5 ms, System: 3.6 ms]

Range (min … max): 47.7 ms … 91.5 ms 100 runs

So we can see that without an index, our request has a mean time of 57.6ms.

Let’s add an index and see if it makes it quicker.

    -- We run this on the database:
    CREATE INDEX index_posts_title_tag ON posts (title, tag);

Now we rerun hyperfine:

    Benchmark 1: ./benchmark.sh
      Time (mean ± σ): 29.5 ms ± 7.2 ms [User: 2.4 ms, System: 3.4 ms]
      Range (min … max): 23.4 ms … 61.8 ms 100 runs

Impressive! Almost twice as fast! But more importantly, we have validated our improvement!
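If you would rather not compare the two runs by eye, hyperfine can write its results to a file with `--export-json`, and a few lines of Ruby can compare them. The sketch below is a minimal example under some assumptions: `summary_ms` is a hypothetical helper, the file names in the comment are placeholders, and the JSON is a trimmed-down sample that follows my understanding of the export layout (a `results` array with `mean` and `stddev` in seconds), filled in with the figures from the two runs above.

```ruby
require "json"

# Hypothetical helper: read the summary of the first benchmarked command
# from a hyperfine JSON export and convert seconds to milliseconds.
def summary_ms(json_text)
  result = JSON.parse(json_text).fetch("results").first
  {
    mean: result.fetch("mean") * 1000.0,
    stddev: result.fetch("stddev") * 1000.0
  }
end

# Trimmed-down sample exports using the figures from the runs above.
# With real files this would be File.read("before.json") and
# File.read("after.json") (placeholder names).
before_json = '{"results":[{"command":"./benchmark.sh","mean":0.0576,"stddev":0.0087}]}'
after_json  = '{"results":[{"command":"./benchmark.sh","mean":0.0295,"stddev":0.0072}]}'

before  = summary_ms(before_json)
after   = summary_ms(after_json)
speedup = before[:mean] / after[:mean]

puts format("before:  %.1f ms ± %.1f ms", before[:mean], before[:stddev])
puts format("after:   %.1f ms ± %.1f ms", after[:mean], after[:stddev])
puts format("speedup: %.2fx", speedup)
```

Keeping the exported files around also gives you something to refer back to if you try further changes later.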

Note 1: Be sure to have a lot of data!

There is no point in trying this with just 10 posts. With 10 posts, before the index:

    Benchmark 1: ./benchmark.sh
      Time (mean ± σ): 18.4 ms ± 5.9 ms [User: 2.4 ms, System: 3.4 ms]
      Range (min … max): 12.8 ms … 56.3 ms 146 runs

After the index:

    Benchmark 1: ./benchmark.sh
      Time (mean ± σ): 15.6 ms ± 2.1 ms [User: 2.4 ms, System: 3.3 ms]
      Range (min … max): 13.2 ms … 25.8 ms 157 runs

There is some difference, but it is much more visible with more data.
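One way to put a number on "much more visible" is to compare the time saved with the spread of the measurements. The sketch below uses the means and standard deviations printed above. The `noise_ratio` helper is a hypothetical rule of thumb of my own, not a formal significance test: it divides the difference in means by the larger of the two standard deviations.

```ruby
# Figures copied from the hyperfine output above: [mean, stddev] in ms.
runs = {
  "440000 posts" => { before: [57.6, 8.7], after: [29.5, 7.2] },
  "10 posts"     => { before: [18.4, 5.9], after: [15.6, 2.1] }
}

# Hypothetical rule of thumb: how many standard deviations (taking the
# noisier of the two runs) separate the two means.
def noise_ratio(before, after)
  (before[0] - after[0]) / [before[1], after[1]].max
end

runs.each do |label, r|
  saved = r[:before][0] - r[:after][0]
  ratio = noise_ratio(r[:before], r[:after])
  puts format("%-13s saved %.1f ms, %.2f standard deviations", label, saved, ratio)
end
```

With the large data set the saving is a little over three standard deviations; with 10 posts it is less than half of one, so it is hard to tell apart from run-to-run noise.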

Note 2: This was a back-end test

Curl will only fetch the HTML, and that is it. It won’t render the HTML or load extra resources such as stylesheets, scripts and images. So this is a back-end test. If you want to test the whole loading of all the front-end assets, etc., you will have to resort to a different command.

I have not looked into copying the cookies across to make sure I am still signed in on the page I am testing, but running a headless Chrome instance can be done like so:

    # First run this to have an easy reference to `chrome` if you are on a Mac:
    alias chrome="/Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome"

Call pages in the headless browser:

    chrome --headless --disable-gpu --dump-dom http://localhost:3001/

Put the `chrome --headless...` command in the benchmark.sh script, and you can use the same basic principles as above. Inside the script, spell out the full path to the Chrome binary instead of `chrome`, because an alias defined in your terminal is not available in a script.

Conclusion

I’ve found this benchmarking method handy because it is quick to do, and the results are pretty reliable.

Early on, I would optimise things more or less blindly and hope for the best in production. But in production, it is tough to figure out whether you improved performance by 10% or whether the difference is due to other factors such as server load. A benchmark on your own computer is relatively controlled, which makes it easier to attribute differences to the specific changes you made.

You can use this concept for any framework, which makes it very portable.